In its July 18, 2023 keynote, Microsoft unveiled several features coming to Bing Chat, including better integration with Microsoft products, a new Enterprise version, and visual search.

The visual search feature is especially interesting, as it lets users try GPT-4’s multimodal capabilities for free.

A Brief Intro to GPT-4’s Multimodal Capabilities

If you have used OpenAI’s earlier large language models, like GPT-3 and GPT-3.5, you will have noticed that they accept only text inputs.

GPT-4 is a massive upgrade over these earlier models, as it accepts both text and image prompts. The model does not just receive the image; it interprets the contents so it can answer user queries about them. This multimodal capability is a major milestone for the AI industry as it moves towards fully understanding prompts and delivering accurate results.

GPT-4 is still in research preview, and users have to pay a subscription fee of $20 per month to unlock it and use the multimodal capabilities.

Try Multimodal Capabilities for Free in Bing Chat

Bing AI Chat lets users try OpenAI’s multimodal capabilities for free. Visual Search in Chat now accepts both text- and image-based prompts, so users can take a picture and ask Bing to explain its contents. The LLM interprets the image in context and answers questions about it.

Visual Search is available on desktop and in the Bing mobile app. It will come to Bing Chat Enterprise later this year.

Here is how you can try this feature right now.

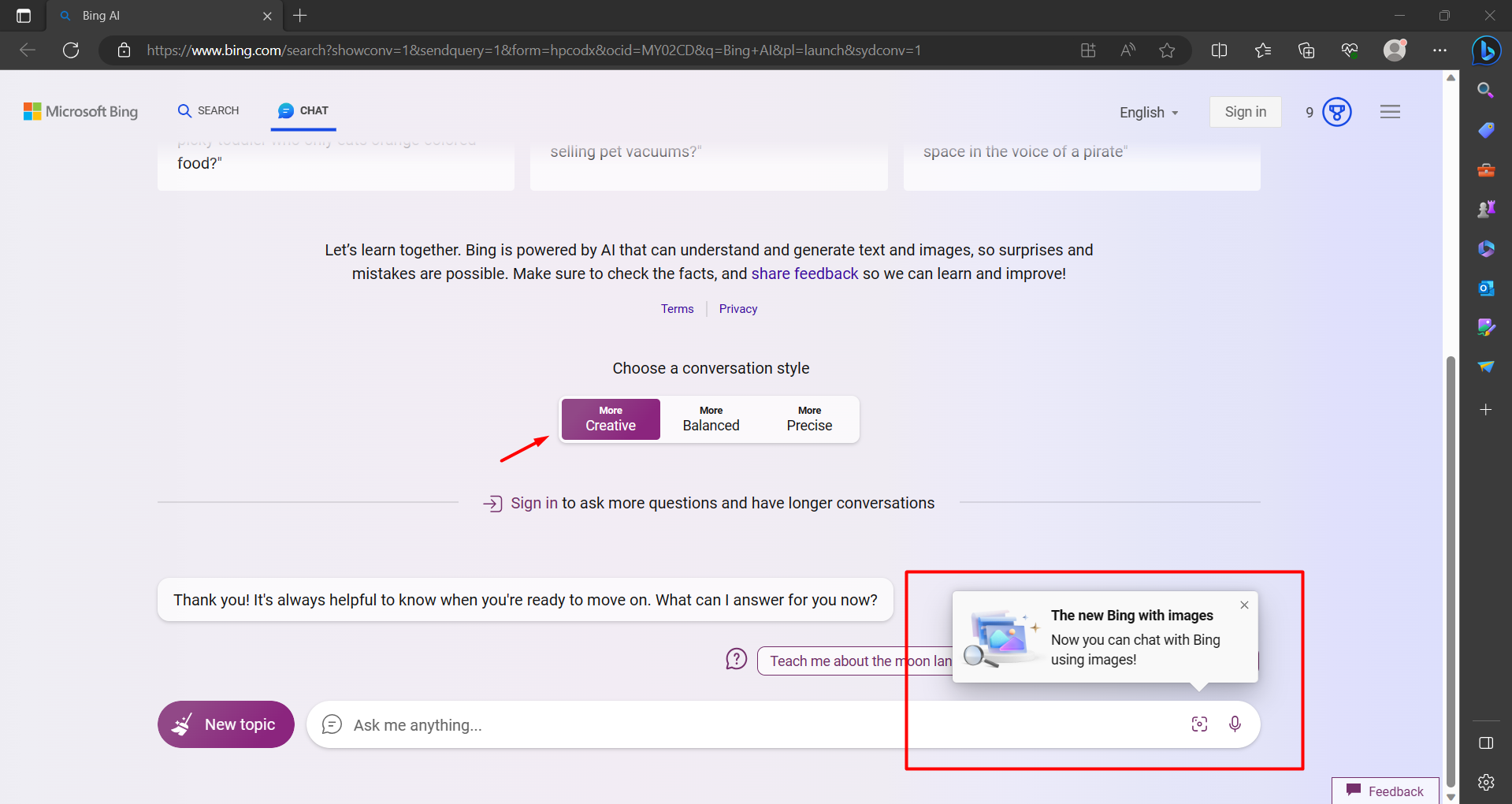

- Launch Bing (website or free app on iOS and Android) and go to the Chat section.

- Switch to the “Creative” conversation style, as it allows you to use GPT-4 capabilities for free in Bing Chat.

- Click on the Visual Search button to access the multimodal capabilities.

- You can either upload an image from your local storage or directly paste the URL of the image.

Testing the Capabilities of Bing Chat Visual Search

There are many everyday situations where an AI model that can read images comes in handy. To test the capabilities of Visual Search, we tried four of them. Take a look at the results.

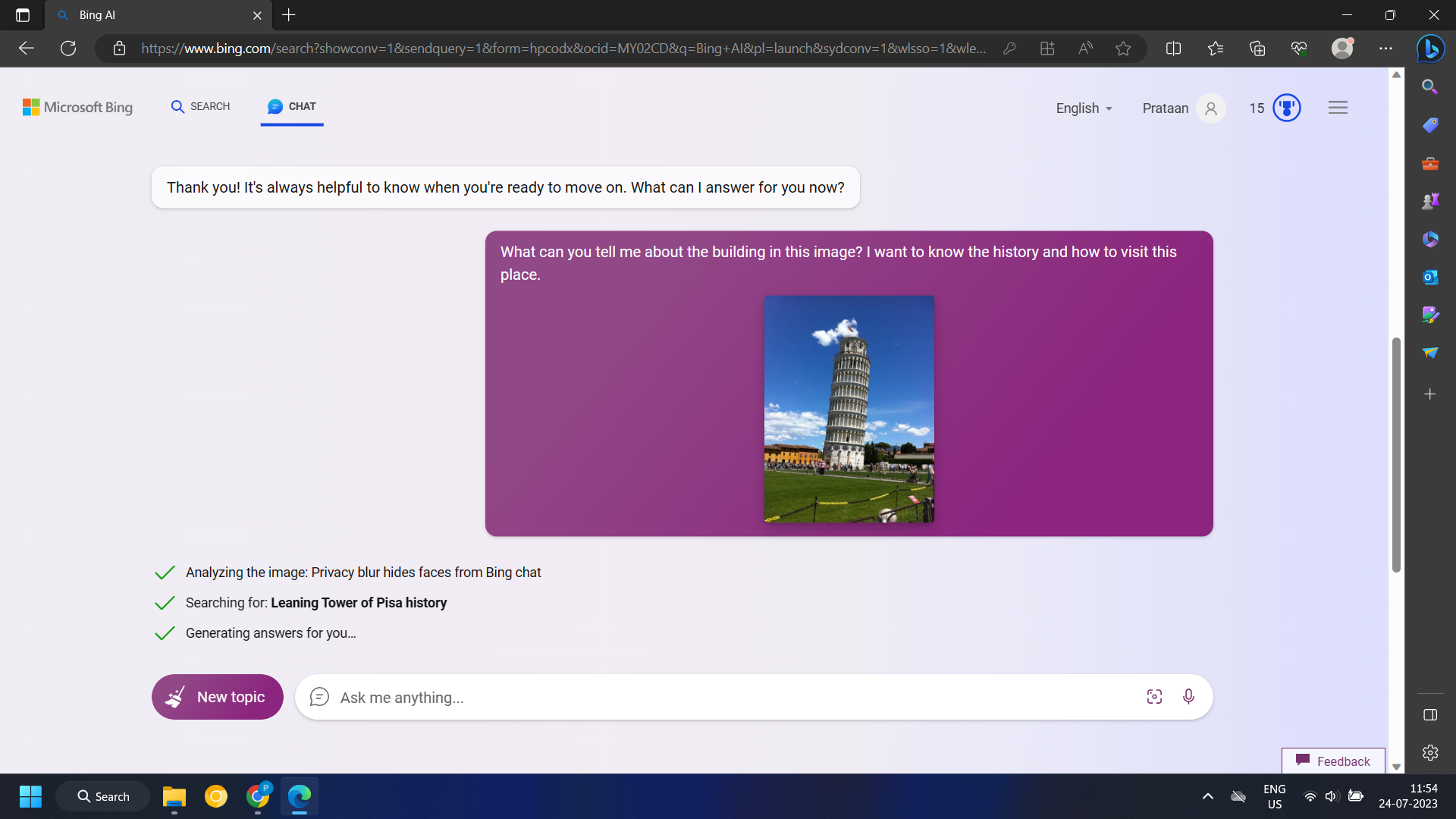

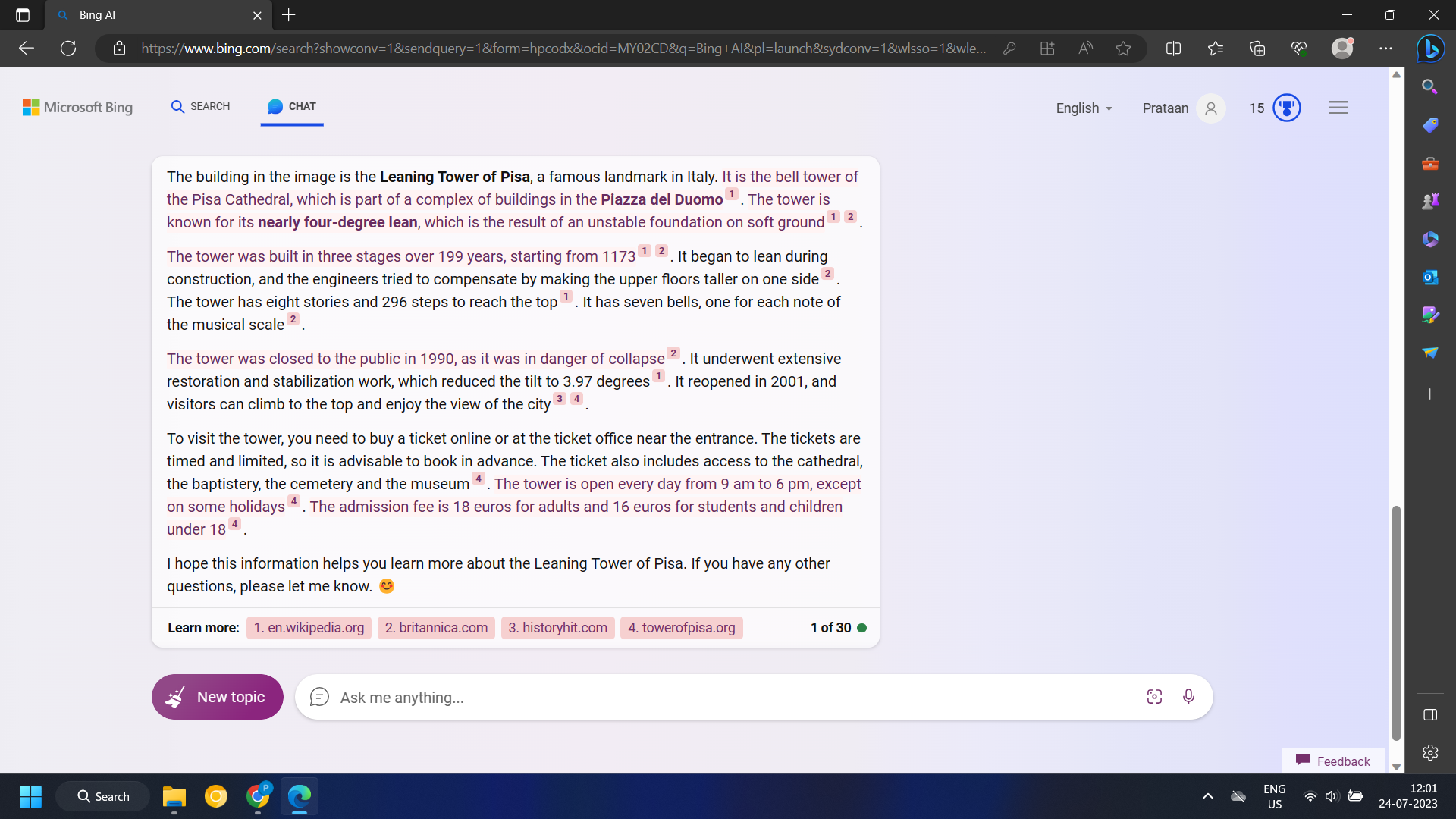

1. Travel Plans

First, we asked Bing to describe a famous architectural wonder and explain how to visit it. It generated a fairly detailed response, including a short guide to visiting the location.

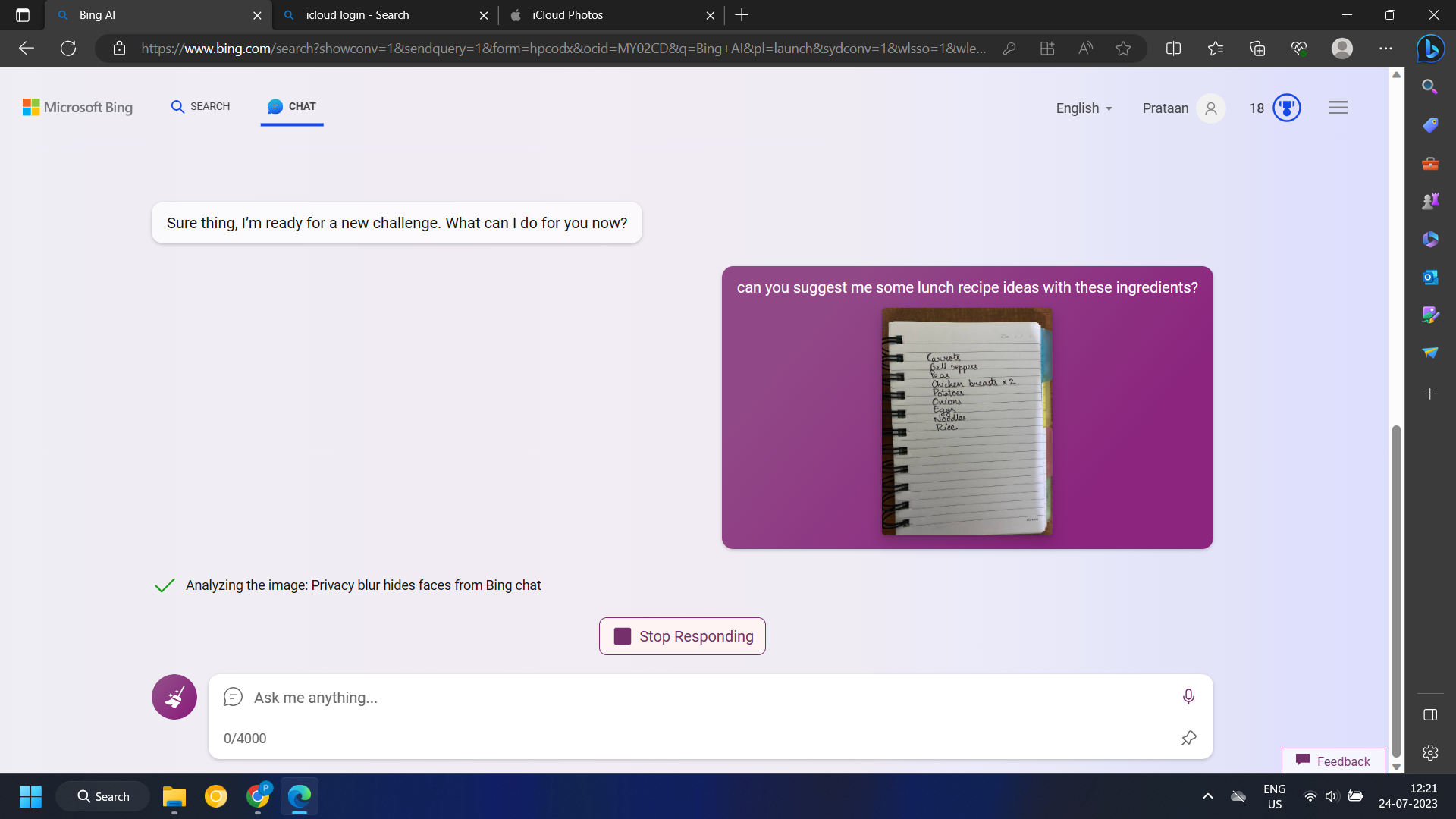

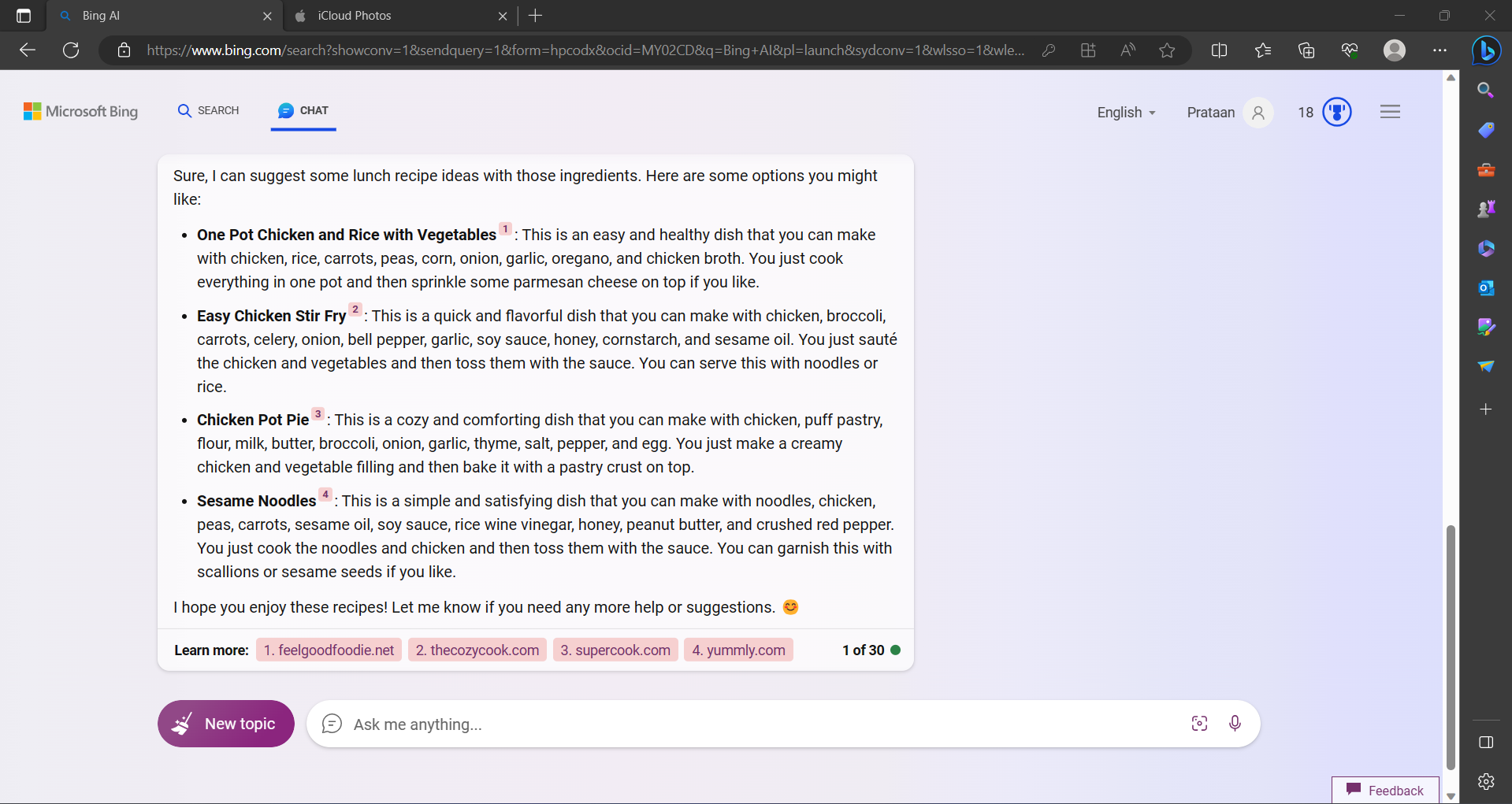

2. Recipes

Next, we tried another realistic scenario: generating lunch recipe ideas from the ingredients in the fridge. We wrote down a list of the available ingredients and asked Bing to generate some recipes.

The recipe ideas are interesting, and we can further ask Bing about each one to get detailed instructions.

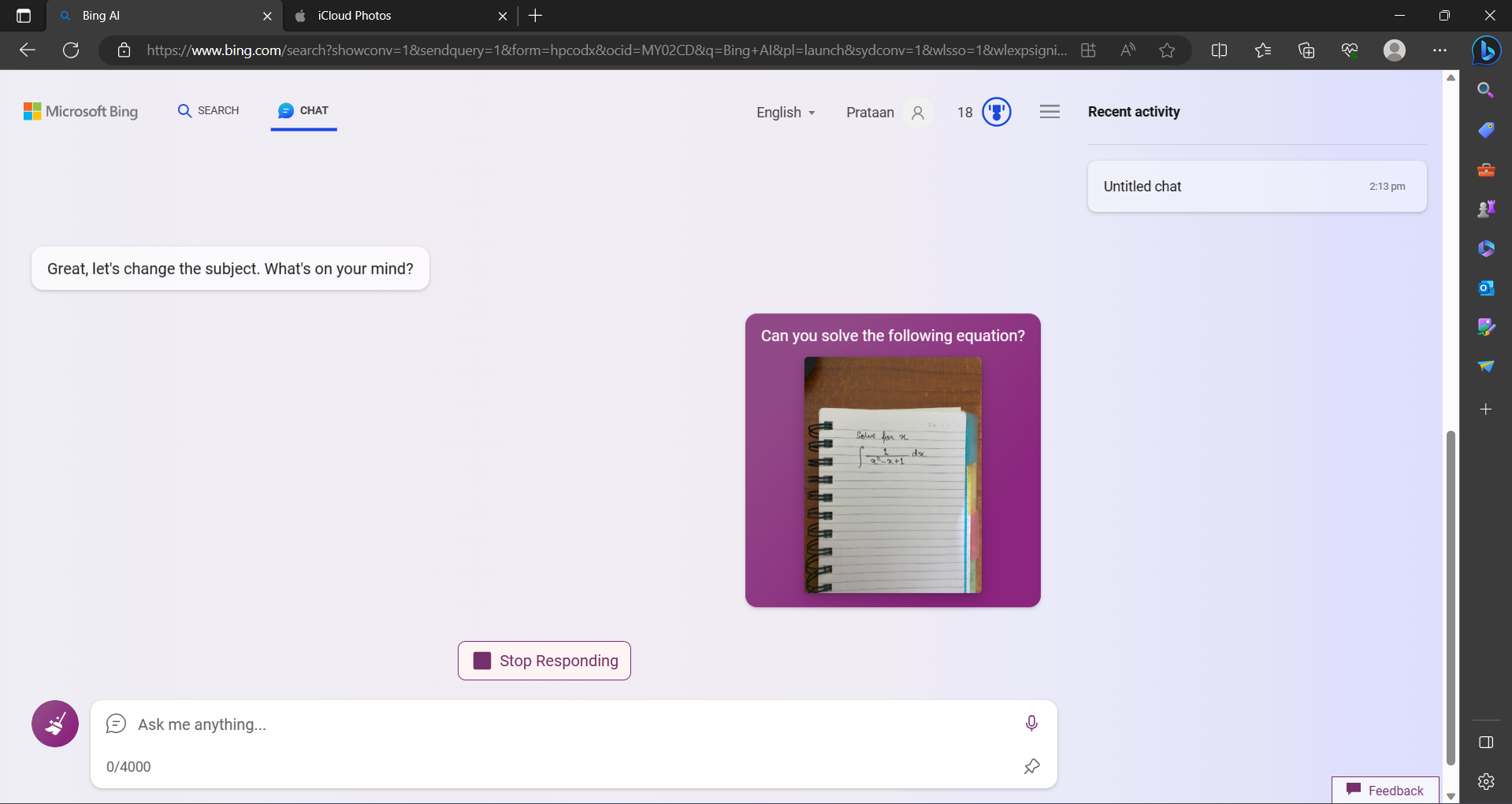

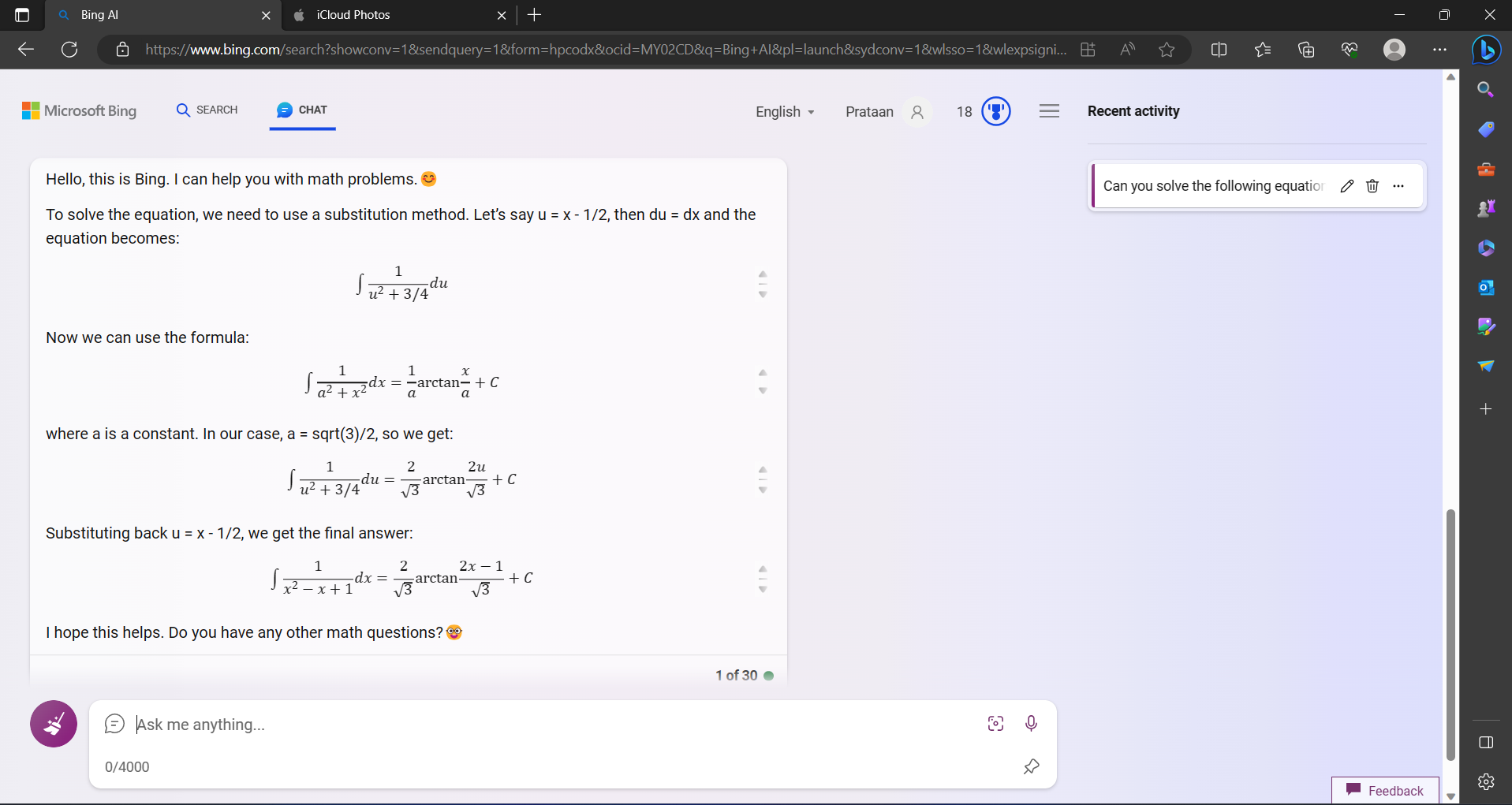

3. Solving Math Equations

We wanted to test the mathematical capabilities of the LLM. We fed a rather tricky calculus problem to Visual Search to see how well the model recognizes the characters and whether it can solve the problem.

The result that Bing AI gave was correct, which we verified against Xaktly (see Example 1).

However, the AI stopped at the inverse tangent functions and did not carry on to the simplified answer. Sadly, you cannot use Bing AI to complete your homework yet, but you can definitely get a head start.
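If you do not have a reference like Xaktly at hand, one way to double-check an antiderivative from the chat is to differentiate it numerically and compare the result with the original integrand. The sketch below is only an illustration: the integrand `1 / (1 + x^2)` and the helper names `derivative` and `matches_integrand` are our own assumptions, not the problem we gave Bing.

```python
import math


def derivative(f, x, h=1e-5):
    """Estimate f'(x) with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


def matches_integrand(antiderivative, integrand, points, tol=1e-6):
    """Return True if the derivative of the candidate antiderivative
    agrees with the integrand (within tol) at every sample point."""
    return all(
        abs(derivative(antiderivative, x) - integrand(x)) <= tol
        for x in points
    )


# Hypothetical example, NOT the problem from our test:
# the integral of 1 / (1 + x^2) is arctan(x) + C.
def integrand(x):
    return 1.0 / (1.0 + x * x)


def candidate(x):
    return math.atan(x)


def wrong_candidate(x):
    # A deliberately wrong answer, to show the check can fail.
    return math.atan(x) + 0.1 * x


sample_points = [-2.0, -0.5, 0.0, 0.7, 3.0]
print(matches_integrand(candidate, integrand, sample_points))
print(matches_integrand(wrong_candidate, integrand, sample_points))
```

A check like this only samples a few points, so it can catch a wrong answer but cannot prove that an answer is right everywhere.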

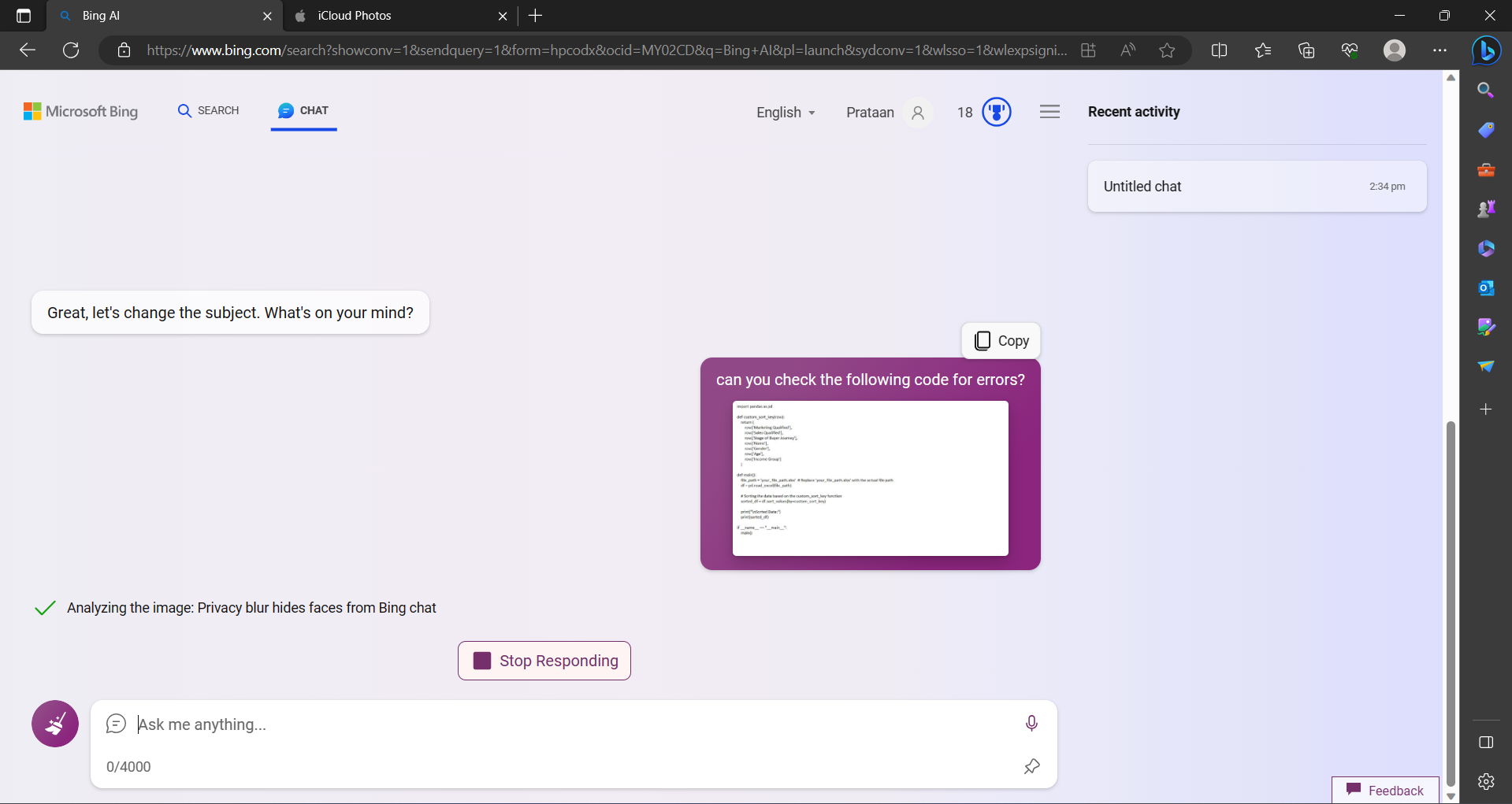

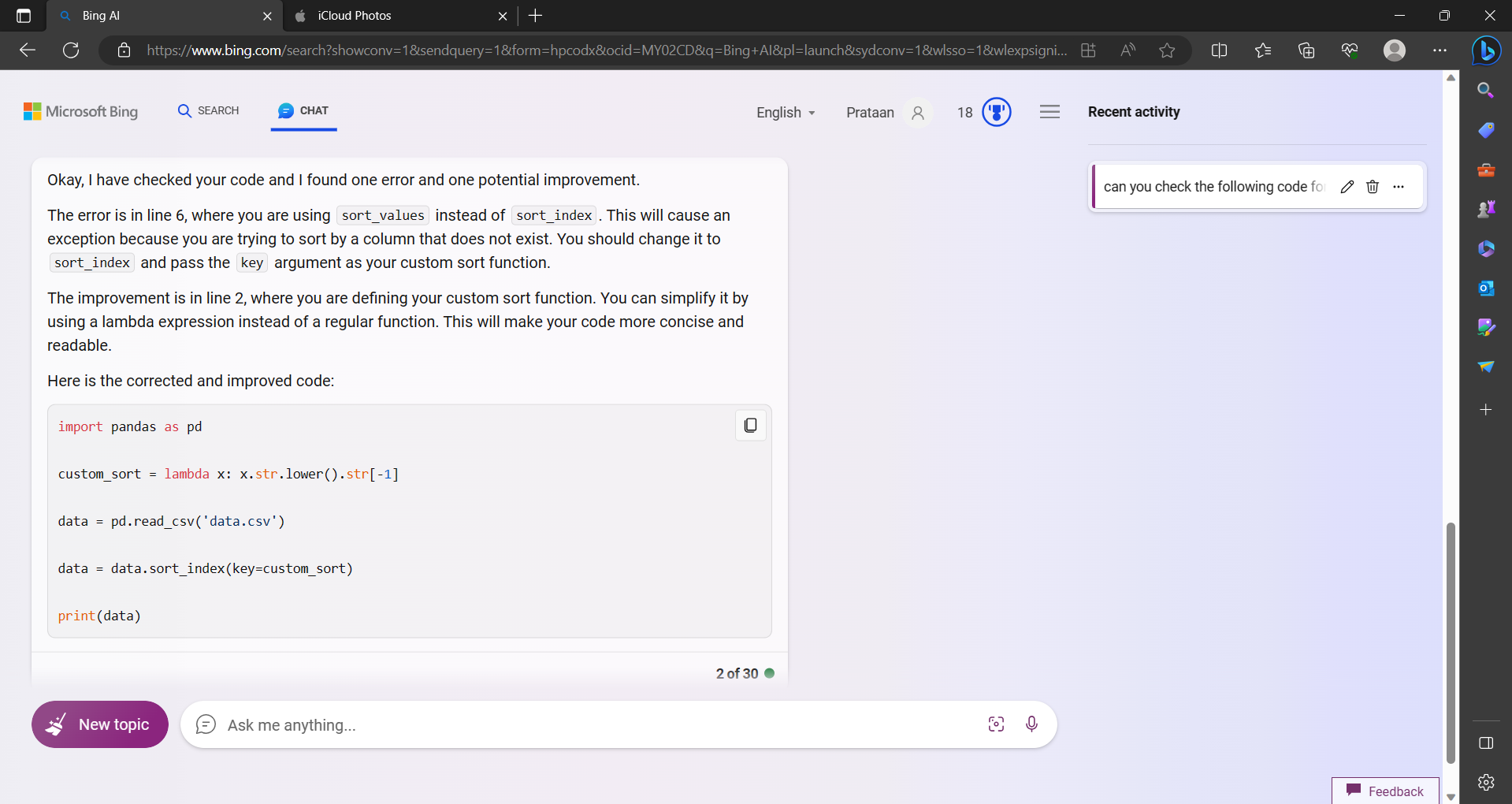

4. Checking Code Accuracy

Finally, we wanted to see whether Bing AI can pull code from an image and check it for accuracy, as is done in some university examinations. We wrote some sample code with one intentional error and then ran it through Bing AI to see if it could catch it.

Sure enough, the AI chat tool caught the error and suggested improved code. We do not know the limits of this yet, as the test code was under 60 lines. For now, it seems to work quite well and can be used in real-life scenarios.

Closing Thoughts

The new visual search feature opens the door to many possibilities for using the AI model in day-to-day situations. In our tests, we could get it to generate travel plans, suggest recipes, get a head start on math homework, and check code. Nothing stops it from being used in other areas of daily life, such as medication, fashion, and technology. What are your thoughts on this upgrade? And what will you ask the Bing AI model? Let us know in the comments.